Are you getting the most out of your website’s SEO efforts? Google Search Console (GSC) is a powerful tool that sheds light on how your site performs in search results, providing crucial insights to drive more organic traffic your way.

This article dives into the indispensable role of GSC in enhancing your website’s visibility, helping you tackle common glitches, and optimizing your content strategy for better user engagement and search rankings. By understanding and leveraging the full suite of features GSC offers, from monitoring search traffic to fixing crawl errors, you can ensure your site not only meets but excels in today’s competitive online space.

The Role of Google Search Console in SEO

Google Search Console (GSC) is a core part of the SEO toolkit, showing how a website performs in Google’s search results. Understanding its role helps you get more from your search engine optimization work, improve visibility, and drive more organic traffic to your site.

What Does Google Search Console Do?

At its core, Google Search Console helps webmasters understand how Google views their website. It shows how your site appears in search results, along with performance metrics such as clicks, impressions, click-through rate, and average position for specific queries.

It alerts you to any issues that might prevent your site from being indexed or featured prominently in search results, such as crawl errors, mobile usability issues, and security problems. Plus, it offers actionable insights on how to resolve these issues, making it an indispensable resource for maintaining an optimized web presence.

Moreover, GSC assists in monitoring your site’s search traffic, understanding how users find your website, and what content appeals to them the most. This allows webmasters to refine their content strategy, focusing on what works best and identifying areas for improvement.

Why Every Webmaster Needs Google Search Console

- Improved Site Visibility: By identifying and addressing the issues highlighted by GSC, you can significantly improve your site’s visibility in search results. This includes optimizing your site for mobile users, ensuring a secure browsing experience, and improving the overall site structure.

- Insightful Analytics: GSC offers detailed analytics on your site’s performance in search results, including the search queries that bring users to your site, the pages that get the most traffic, and how your site appears in search. These insights can guide your content strategy and SEO efforts, focusing on what brings the most value to your audience.

- Enhanced User Experience: By utilizing the feedback and data from GSC, you can create a more user-friendly website. Addressing issues like mobile usability and site speed not only benefits your search engine rankings but also improves the user experience, leading to higher engagement and conversion rates.

- Direct Communication with Google: Google Search Console is essentially a direct line of communication with Google. It’s where you receive notifications directly from Google about issues on your site, where you can submit sitemaps for better indexing, and where you can request re-indexing of new or updated content. This direct interaction ensures that your site is promptly evaluated and represented in search results.

Ultimately, Google Search Console is an invaluable asset for anyone responsible for a website’s performance. Its insights and tools are crucial for diagnosing issues, improving site ranking, and understanding how to cater to your site’s audience effectively. Engaging with the data and resources provided by GSC is one of the most direct ways to enhance your site’s SEO and ensure that your content reaches its intended audience.

Common Glitches in Google Search Console You Should Know

Google Search Console is a crucial tool for website owners and SEO experts, helping to highlight issues that could affect a site’s visibility and performance. Among these, several common errors can hinder your site’s relationship with Google’s search index. Understanding these errors is the first step to maintaining a healthy and accessible website.

Exploring the Various Types of Google Search Console Errors

When diving into Google Search Console, you might encounter a variety of errors, each indicating a different issue with how Google views and interacts with your site. These errors can range from crawl issues to security problems. Being aware of these issues allows webmasters to take corrective action, ensuring their site remains friendly to both users and search engines.

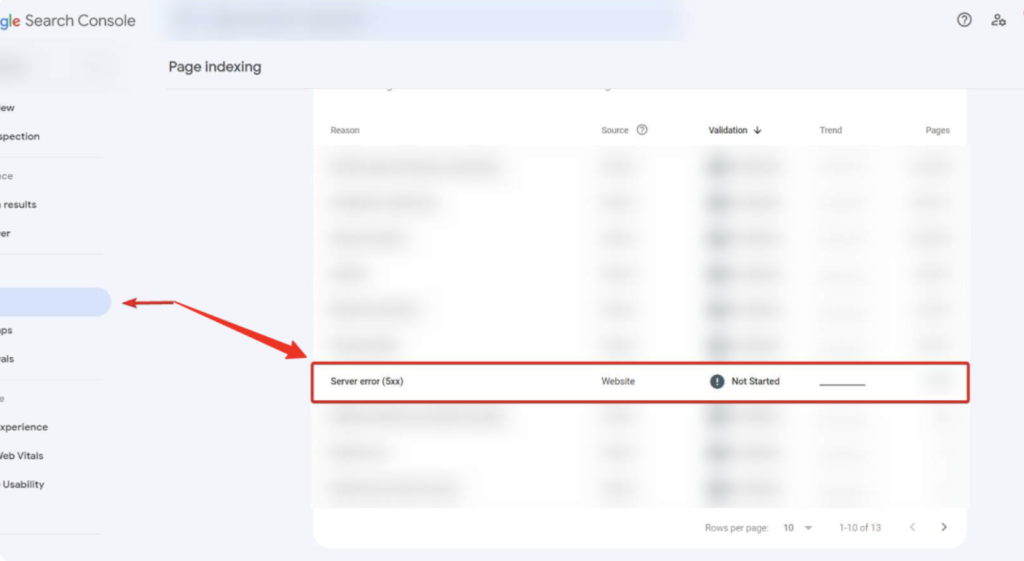

Understanding Server Errors (5xx) and Their Impact

Server errors, denoted by 5xx status codes, signal issues with your website’s server. They mean that when Googlebot tried to access a page, the server couldn’t fulfill the request, potentially due to an overload or maintenance issue. Such errors can severely impact your site’s indexing and user experience. To resolve them, check your server’s load and reliability. Ensure up-to-date software and sufficient resources to handle traffic spikes. If issues persist, consider contacting your hosting provider or a web developer for further investigation.

The Mystery Behind 404 and Soft 404 Errors

A “404 Not Found” error occurs when Googlebot attempts to access a page that doesn’t exist. This might happen if a page is deleted or moved without proper redirection. A “Soft 404” occurs when a non-existent page displays an error message, or little to no content, while the server returns a success code such as 200 OK instead of the HTTP 404 status code.

Both scenarios can frustrate users and waste Googlebot’s crawl budget. To fix these, use 301 redirects to guide users and bots to relevant pages, remove links to non-existent pages, and ensure your custom error pages return the correct 404 status code.
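
The difference between a hard 404 and a soft 404 comes down to the status code the server sends. As a rough sketch in Python, you could flag suspicious pages by comparing the status code with the page text. The `classify_response` helper and the `ERROR_PHRASES` list are illustrative assumptions, and Google’s own soft 404 detection is more involved than this.

```python
# Hypothetical phrases that suggest an error page; adjust to your own templates.
ERROR_PHRASES = ("page not found", "no longer exists", "nothing was found")


def classify_response(status: int, body: str) -> str:
    """Label a response as 'hard_404', 'soft_404', 'ok', or 'other'."""
    text = body.lower()
    looks_like_error = any(phrase in text for phrase in ERROR_PHRASES)
    if status in (404, 410):
        # The server correctly reports that the page is missing or gone.
        return "hard_404"
    if status == 200 and looks_like_error:
        # Success code, but the content reads like an error page.
        return "soft_404"
    if status == 200:
        return "ok"
    return "other"
```

A page labeled `soft_404` is a candidate for either a real 404/410 response or a 301 redirect to a relevant page.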

Handling Unauthorized Request Errors (401)

Unauthorized Request errors, signaled by the 401 status code, mean a page requires a login or other authentication to access, and Googlebot can’t get through. These are common with membership sites or content behind paywalls. While these errors are sometimes intentional, they can limit content indexing.

If these pages should be public, check your site’s privacy settings and server configurations to ensure they’re accessible. For content meant to be restricted, consider providing a summary or snippet in the open-access portion of your site to help Google understand the content’s context.

Step-by-Step Guide on Fixing Server Errors (5xx)

Facing a server error (5xx) can be frustrating, but addressing it promptly ensures your website remains user-friendly and retains its search engine rankings. Here’s how to tackle these errors effectively:

- Review Recent Changes: Sometimes, a server error can emerge following recent updates on your website. If you’ve implemented new changes or updates, consider reverting them temporarily to see if the issue resolves.

- Check Server Resources: Verify that your server has enough CPU, memory, and disk space to handle requests. Insufficient resources can often lead to these errors. If needed, upgrade your server or hosting plan to accommodate your website’s demands.

- Contact Hosting Support: If you’re unsure about the root cause of the error, reaching out to your hosting provider is a wise step. They have the expertise to diagnose and resolve server-related issues effectively.

- Request a Re-crawl: Once you’ve addressed the underlying issue, ask Google to re-crawl the affected pages. This can be done in Google Search Console by inspecting each updated URL with the URL Inspection tool and requesting indexing.

By following these steps, you’ll not only fix the server error but also prevent potential negative impacts on your SEO performance.
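
To spot 5xx responses before Googlebot does, a small script can poll your key URLs. The sketch below uses only Python’s standard library. The function names are assumptions made for illustration, and a production monitor would also need to handle timeouts and connection failures.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a URL using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises for 4xx/5xx responses; the status code is still available.
        return error.code


def find_server_errors(
    urls: Iterable[str],
    get_status: Callable[[str], int] = fetch_status,
) -> Dict[str, int]:
    """Return {url: status} for every URL that answers with a 5xx code."""
    errors = {}
    for url in urls:
        status = get_status(url)
        if 500 <= status <= 599:
            errors[url] = status
    return errors
```

The `get_status` parameter lets you swap in a different fetcher, which also makes the check easy to test without touching the network.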

Diagnosing and Resolving 404 Not Found Errors

“404 Not Found” errors occur when a server can’t find the requested page. This usually happens if a page is deleted or moved without proper redirection. Solving these errors is crucial for maintaining a smooth user experience and site efficiency. Here’s how to tackle them:

- Identify the Errors: Use tools like Google Search Console to find URLs that return a 404 error.

- Restore Mistakenly Deleted Pages: If a page was deleted by accident, restoring it from a backup is the easiest fix.

- Implement 301 Redirects: For intentionally removed or relocated pages, setting up a 301 redirect to a relevant page helps preserve the user experience and SEO value.

Addressing these errors swiftly ensures that your visitors and search engine crawlers can navigate your site effectively.

Best Practices for Redirect and Soft 404 Errors

Soft 404 errors mislead both users and search engines: the server reports that a page exists when its content is not actually available. Redirect errors occur when a redirect is misconfigured, for example when it loops, chains through too many hops, or leads to an irrelevant page. To manage these errors:

- Avoiding Misleading Redirects: Ensure all redirects point to content that is as close as possible to what the original page offered. This preserves a positive user experience and SEO strength.

- Correcting Soft 404s: Regularly monitor your website for soft 404 errors using tools like Google Search Console, and correct them by either restoring the missing page, if it was deleted by mistake, or setting up proper redirects or a 410 response if the page is gone for good.

- Maintaining Clear Navigation: Keep your site’s navigation clear and ensure all links direct to valid, active pages. Regularly updating your sitemap and submitting it to search engines can help minimize indexing of nonexistent pages.

Adhering to these best practices helps in maintaining the health of your website, improving user experience, and ensuring your site remains favored by search engines.
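
Faulty redirects are easier to catch when the redirect rules are checked as data. The following is a minimal sketch, assuming your redirects can be exported as a simple mapping from old path to new path. The `trace_redirects` function and its verdict labels are hypothetical names chosen for this example.

```python
from typing import Dict, List, Tuple


def trace_redirects(
    start: str, redirects: Dict[str, str], max_hops: int = 10
) -> Tuple[List[str], str]:
    """Follow redirects from `start` and return (path_taken, verdict)."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            # We came back to a URL we already visited.
            return path, "loop"
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too_long"
    hops = len(path) - 1
    if hops == 0:
        return path, "no_redirect"
    if hops == 1:
        return path, "direct"
    # More than one hop: collapse it so the first URL points at the final one.
    return path, "chain"
```

Any path reported as `chain` can usually be collapsed into a single 301 from the first URL to the last, and any `loop` needs one of its rules removed.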

Tackling Indexation Issues and Blocked Resources

Fixing indexation issues and managing blocked resources are crucial steps in ensuring your website is accurately represented in search engine results. Understanding the common causes and remedies can significantly enhance your site’s visibility and functionality.

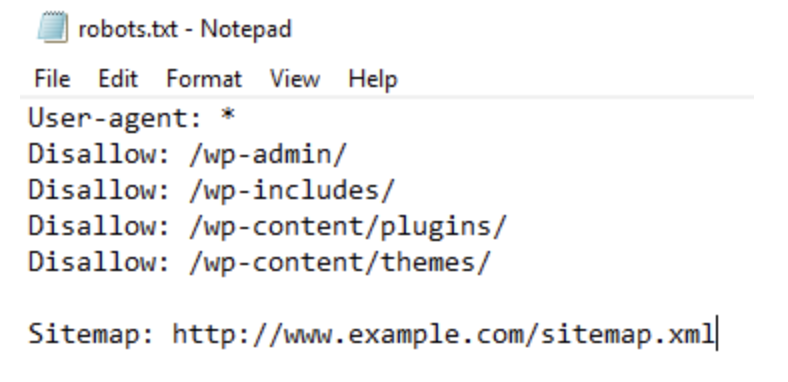

The Right Way to Deal with Pages Blocked by Robots.txt

Robots.txt files play a pivotal role in guiding search engine bots through your website’s content. If you’ve discovered that certain pages are unintentionally blocked, it’s essential to address this promptly to avoid impacting your site’s SEO performance.

Follow these steps to correct issues caused by robots.txt files:

- Review Your Robots.txt File: Begin by checking the robots.txt file for any ‘Disallow’ directives that may be unintentionally blocking important pages. Each ‘Disallow’ line instructs search engines to avoid crawling the specified path.

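- Modify the Disallow Directives: If you find a directive blocking a page that should be crawled, remove or narrow the directive. Keep ‘Disallow’ rules only for paths you genuinely want search engines to stay out of. Note that robots.txt controls crawling rather than indexing, so a blocked URL can still end up indexed if other pages link to it.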

- Update Your Sitemap: Once adjustments are made, update your sitemap to include the formerly blocked pages and submit it to search engines. This action signals search engines to re-crawl and index these pages.

- Monitor the Changes: Use tools like Google Search Console to monitor how these changes affect your site’s indexation status. It may take some time for search engines to process these changes and reflect them in search results.

Addressing blocks in your robots.txt files helps ensure that all valuable content is accessible to search engines, enhancing your site’s potential visibility and ranking.
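
To review a robots.txt file against a list of important pages, Python’s standard `urllib.robotparser` module can do the matching for you. This is a sketch under stated assumptions: the `blocked_urls` helper and the sample rules are made up for illustration, and Python’s parser may not match Googlebot’s behavior exactly, particularly for wildcard patterns.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def blocked_urls(
    robots_txt: str, urls: List[str], user_agent: str = "Googlebot"
) -> List[str]:
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() accepts the file content as a list of lines.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Illustrative rules and URLs only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/
"""

SAMPLE_URLS = [
    "https://example.com/blog/post",
    "https://example.com/private/report",
    "https://example.com/tmp/cache.html",
]

print(blocked_urls(SAMPLE_ROBOTS, SAMPLE_URLS))
```

Running the check after every robots.txt change gives you an early warning if an important page becomes blocked.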

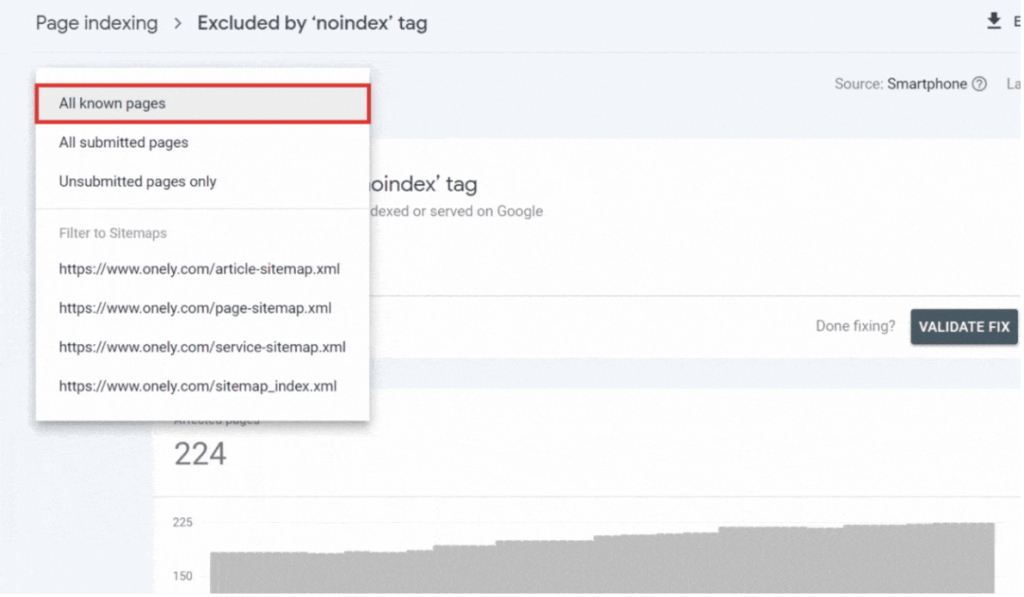

Removing the “Noindex” Tag from Your Pages

The noindex tag is a powerful tool that instructs search engines not to include a specific page in their indexes. While useful for certain parts of your website, incorrectly applied tags can hinder your site’s SEO performance. To remove unwanted noindex tags, follow these steps:

- Identify Pages with Noindex Tags: Use a website crawler tool to scan your site and pinpoint pages with ‘noindex’ directives.

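- Remove the Noindex Tags: Edit your website’s HTML code to remove the ‘noindex’ tag from the pages you want indexed. This typically involves deleting the robots meta tag from the <head> section of your web page. If the directive is sent as an X-Robots-Tag HTTP header instead, remove it in your server configuration.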

- Submit Pages for Re-indexing: After removing the tags, use tools like Google Search Console to submit the updated pages for indexing. This step is crucial for alerting search engines to re-evaluate these pages.

Correctly managing ‘noindex’ directives ensures that all your valuable content has the opportunity to rank in search engine results. Regularly auditing your website for accidental use of ‘noindex’ tags can prevent potential visibility issues.
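
If you do not have a crawler at hand, a few lines of Python can check a page’s HTML for a robots meta tag. This is a minimal sketch using the standard `html.parser` module. The class and function names are assumptions for this example, and it only inspects the HTML, not any X-Robots-Tag response header.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html: str) -> bool:
    """Return True if the HTML carries a noindex (or equivalent 'none') directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    # 'none' is shorthand for 'noindex, nofollow'.
    return "noindex" in finder.directives or "none" in finder.directives
```

Feeding each page’s source into `has_noindex` and listing the URLs that return `True` gives you a quick audit list to review by hand.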

Enhancing User Experience to Preempt SEO Glitches

UX design not only enhances the usability of a site but also contributes significantly to improving its search engine rankings. Google, for instance, prioritizes sites that offer seamless, user-friendly experiences. This is where optimizing your site’s Core Web Vitals and solving HTTPS errors become pivotal.

By focusing on elements such as page speed, responsiveness, and secure connections, websites can preempt potential SEO problems. A proactive approach to UX design helps in maintaining higher engagement rates, reducing bounce rates, and eventually, achieving better ranking positions on search engine results pages (SERPs).

Optimizing Core Web Vitals for Better Usability

Optimizing Core Web Vitals is essential for websites aiming to provide the best user experience. These metrics measure the health of a site in terms of loading performance, interactivity, and visual stability. Here are ways to improve these aspects:

- Improve Largest Contentful Paint (LCP): Enhance loading times by optimizing images, leveraging browser caching, and removing unnecessary third-party scripts.

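- Reduce Interaction to Next Paint (INP): INP replaced First Input Delay (FID) as a Core Web Vital in March 2024. Minimize JavaScript execution time and break up long tasks to ensure the site responds quickly to user interactions.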

- Minimize Cumulative Layout Shift (CLS): Stabilize layout shifts by specifying size attributes for images and advertisements, and avoiding inserting content above existing content unless in response to a user interaction.

By addressing these factors, websites can greatly improve user satisfaction and performance on both desktop and mobile devices, directly impacting their search engine ranking positively.
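
To make these metrics actionable, it helps to grade measured values against fixed boundaries. The sketch below uses the “good” and “poor” thresholds Google publishes for each metric, including INP, the responsiveness metric that replaced FID in March 2024. The `rate_metric` helper is a hypothetical name, and the values you feed in would come from your own field or lab measurements.

```python
# (good_up_to, needs_improvement_up_to) per metric.
# LCP, INP, and FID are in milliseconds; CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "FID": (100, 300),  # legacy metric, kept for older reports
    "CLS": (0.1, 0.25),
}


def rate_metric(name: str, value: float) -> str:
    """Grade a Core Web Vitals measurement as good, needs improvement, or poor."""
    good_limit, poor_limit = THRESHOLDS[name]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"
```

For example, an LCP of 3,000 ms falls between the two boundaries and is graded as needing improvement, while a CLS of 0.3 is graded as poor.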

Solving HTTPS Errors for a Secure User Experience

Securing a website with HTTPS is not only about encryption and security but also about building trust with your visitors. However, implementing HTTPS can sometimes introduce errors if not done correctly. Common HTTPS-related issues include mixed content errors, expired SSL certificates, and redirect errors.

Here’s how to tackle them:

- Resolve Mixed Content Issues: Ensure that all resources (CSS files, images, scripts, etc.) are loaded over HTTPS to prevent mixed content warnings.

- Regularly Update SSL Certificates: Keep track of your SSL certificate’s expiration dates and renew them promptly to avoid security warnings that can deter visitors.

- Correct Redirect Errors: Properly configure redirects from HTTP to HTTPS URLs to prevent loop errors or incorrect redirect chains that can negatively impact loading times and user experience.

Effectively solving these HTTPS issues ensures a secure, trustworthy environment for users, contributing to a positive perception and higher rankings in search engine results.
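
Mixed content is usually straightforward to detect, because the offending resources are referenced with an explicit `http://` URL. The following is a rough sketch using Python’s standard `html.parser`. The tag list, the `LOADING_RELS` set, and the function names are assumptions for this example, and it will not catch resources loaded from CSS or JavaScript.

```python
from html.parser import HTMLParser

# Tags whose URL attribute loads a subresource into the page.
RESOURCE_ATTRS = {
    "img": "src",
    "script": "src",
    "iframe": "src",
    "audio": "src",
    "video": "src",
    "source": "src",
    "link": "href",
}
# <link> only loads a resource for some rel values (canonical, for example, does not).
LOADING_RELS = {"stylesheet", "icon", "preload"}


class MixedContentFinder(HTMLParser):
    """Collect subresource URLs that are requested over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        url_attr = RESOURCE_ATTRS.get(tag)
        if url_attr is None:
            return
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "link":
            rels = set(attributes.get("rel", "").lower().split())
            if not rels & LOADING_RELS:
                return
        url = attributes.get(url_attr, "")
        if url.lower().startswith("http://"):
            self.insecure.append(url)


def find_mixed_content(html: str) -> list:
    """Return insecure resource URLs found in an HTML document served over HTTPS."""
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure
```

Ordinary `<a href>` links are deliberately ignored, since linking to an HTTP page does not trigger a mixed content warning.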

By prioritizing user experience through Core Web Vitals optimization and addressing HTTPS errors, websites not only foster a more engaging and secure environment for visitors but also solidify their standing in search engine results, driving more traffic and achieving greater online success.

Manual Actions: The Dreaded Google Penalties

When Google’s team finds that a website doesn’t meet its guidelines, they may impose a manual action, or penalty, against the site. Unlike algorithmic demotions, which are applied automatically by software, manual actions are applied by human reviewers. They are serious because they directly reduce a site’s visibility in Google search results, potentially leading to decreased traffic and lost revenue.

Understanding Manual Actions and Their Consequences

Manual actions are triggered by various breaches of Google’s Webmaster Guidelines (now published as Google Search Essentials). These breaches might include unnatural linking practices, thin content, deceptive redirects, and more. When a site is penalized, it may be demoted or removed from search results entirely.

Beyond the immediate impact on search visibility, manual actions can tarnish a website’s reputation and trustworthiness among users. Receiving a manual action notice in Google Search Console is a clear sign that some aspects of the site need immediate attention and correction.

How to Recover from a Manual Penalty by Google

Recovering from a manual penalty is straightforward in principle but can be time-consuming. The process generally involves identifying the issue, taking corrective action, and then requesting a review from Google. Here are the steps for recovery:

- Identify the Issue: Start by reviewing the Manual Actions report in Google Search Console to understand the specific reason(s) for the penalty.

- Corrective Actions: Based on the reason for the penalty, make necessary changes to your site. This may involve removing unnatural links, improving content quality, or ensuring your site is free from hacked content.

- Documentation: Document the changes made as proof of your efforts to comply with Google’s guidelines. This documentation can be helpful during the review process.

- Submit a Reconsideration Request: Once corrections are made, submit a request for review through Google Search Console, providing detailed information on the actions taken to rectify the issues.

- Wait for Review: Google’s team will review your site again, and if satisfied with the corrections, the manual action will be lifted.

Recovering from a manual penalty can be challenging, but by following Google’s guidelines and addressing the specific issues noted, you can restore your site’s ranking and visibility. Regular monitoring of your site’s health through Google Search Console and staying up-to-date with Google’s latest guidelines are proactive measures to avoid future penalties.

Proactive Measures to Avoid Future Google Search Console Glitches

Taking proactive steps is crucial for maintaining the health of your website and avoiding the errors that Google Search Console reports, which could otherwise hinder your visibility in Google Search. Implementing routine checks and updates can prevent many common issues that websites face, ensuring seamless operation and optimal search engine ranking.

Regular Monitoring of Google Search Console Reports

Regularly reviewing your Google Search Console reports is an essential practice for any webmaster aiming to keep their site free from errors and glitches. This tool provides valuable insights into how Google views your site, highlighting areas that require attention, such as crawl errors, mobile usability issues, and security problems.

By keeping an eye on these reports, you can promptly address issues before they impact your site’s performance. This includes fixing broken links, resolving server errors, and making adjustments to improve mobile accessibility, which not only enhances user experience but also contributes to better search engine rankings.

Why Regular Site Audits are Critical

Conducting regular site audits is another cornerstone of maintaining a healthy and effective website. This process involves a thorough examination of your site’s content, structure, and technical setup to identify any issues that could affect your search engine visibility.

Regular audits help uncover hidden problems like duplicate content, slow page load times, and missing image alt attributes which, if left unaddressed, can surface as errors in Google Search Console. Tools such as Semrush provide comprehensive audits, offering actionable insights to improve your site’s SEO performance. This proactive step ensures that your website remains in line with best practices, providing a solid foundation for your online presence.

The Importance of Keeping Up with Google’s Evolving Algorithms

Google’s algorithms are continuously evolving, reflecting the company’s commitment to delivering the best search results to its users. For website owners and SEO professionals, keeping abreast of these changes is paramount. Understanding the latest updates and how they influence search engine rankings allows you to adjust your SEO strategies accordingly.

This might involve updating your content, making technical improvements to your site, or enhancing user experience (UX). By staying informed and adaptable, you can mitigate potential issues that might arise from algorithm updates, thereby minimizing the risk of errors in your Google Search Console and securing your site’s visibility in search results.

Concluding Thoughts on Managing Google Search Console Glitches

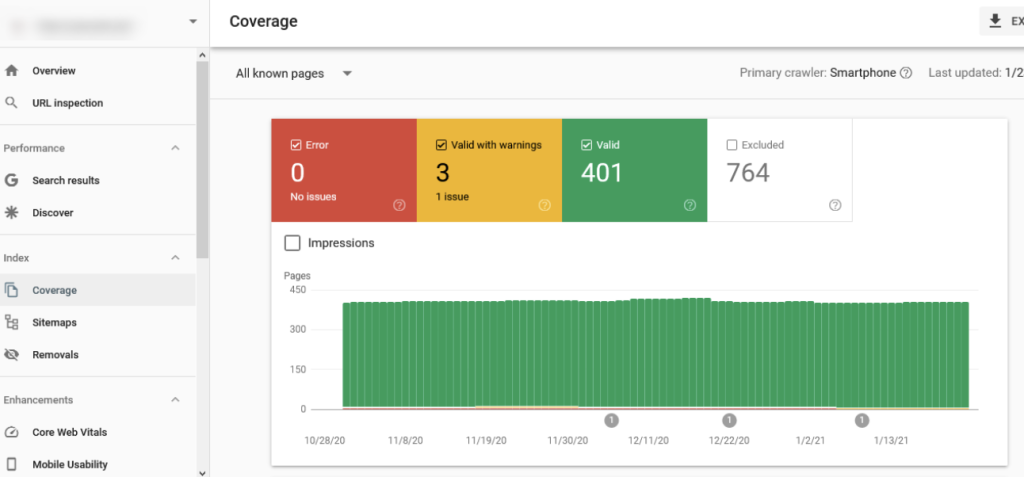

Navigating the maze of Google Search Console (GSC) errors can feel overwhelming, but it’s a critical step toward ensuring your website’s smooth operation and visibility online. Each error, from crawl issues to indexing mishaps, acts as a hurdle in your site’s journey to achieve its potential in search rankings. Nonetheless, by adopting a strategic approach to identify, understand, and rectify these errors, you can pave a clear path for Googlebot to access, crawl, and index your site efficiently.

First and foremost, familiarize yourself with the GSC Index Coverage report (labeled “Page indexing” in the current Search Console interface). This report sheds light on the status of your web pages from Google’s perspective. By analyzing its findings, you can pinpoint the exact nature of the issues affecting your site’s performance.

Whether it’s a server error that’s preventing Googlebot from accessing your site or misplaced ‘noindex’ tags that are keeping pages out of the index, each problem has a solution. For instance, ensuring your server is correctly configured to handle requests can address accessibility issues, while reviewing and correcting your robots.txt file and meta tags can lift restrictions that keep valuable pages out of Google’s view.

Moreover, the importance of responsive web design and fast page loading times cannot be overstated. These factors not only affect your site’s usability and visitor satisfaction but also play a significant role in how well your site performs in search results. By prioritizing mobile-friendliness and optimizing for Core Web Vitals, you make your site more appealing not just to users but to search engines as well.

However, it’s essential to recognize that not all errors flagged by Google necessitate immediate fixing. Some might be intentional (such as the use of ‘noindex’ tags on certain pages) or irrelevant to your site’s overall SEO strategy. Concentrate your efforts on rectifying errors that have a direct impact on your site’s ability to rank and attract organic traffic. Creating high-quality, original content should always be at the heart of your strategy, as it is a surefire way to encourage Google to index your pages and serve them to users.

In sum, while GSC errors can be daunting, they are not insurmountable. With a clear understanding of the issues at hand, a focused approach to resolving them, and the right tools, you can ensure your site remains accessible, secure, and primed for search engine success.